The Fundamentals of Algorithm Efficiency

In computer science, it is crucial to understand how an algorithm's running time and memory use grow as its input grows. This is where time complexity and space complexity come into play, guiding programmers as they optimize their code.

Decoding Complexity with Greek Letters

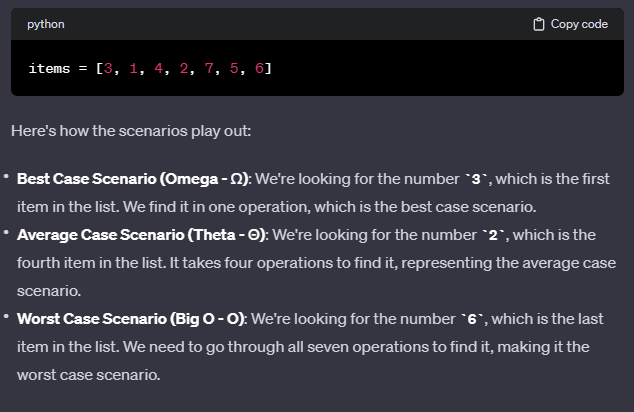

When discussing algorithm efficiency, three symbols come up repeatedly: Omega (Ω), Theta (Θ), and Big O (O, sometimes read as Omicron). In everyday usage, each is associated with a different scenario in the execution of an algorithm.

The Best Case, Average Case, and Worst Case Scenarios

Consider a list of seven items in which you need to find a specific number using a for loop. If the number is the first item, you’ve hit the best case, represented by Omega (Ω): a single comparison is enough. If the number is the last item, that’s the worst case, commonly referred to as Big O (O): you must iterate through the entire list, making seven comparisons. If the target is equally likely to be at any position, the search takes four comparisons on average, the same as finding the middle (fourth) item. This is the average case, denoted by Theta (Θ).
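The seven-item search can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: the function name `linear_search`, the comparison counter, and the list contents are assumptions introduced here. The average is computed by searching for every item once, which assumes each position is equally likely.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1  # one comparison per item visited
        if value == target:
            return index, comparisons
    return -1, comparisons


numbers = [1, 2, 3, 4, 5, 6, 7]  # hypothetical seven-item list

best = linear_search(numbers, 1)[1]   # target is the first item
worst = linear_search(numbers, 7)[1]  # target is the last item
# Average over every possible target position, each equally likely.
average = sum(linear_search(numbers, n)[1] for n in numbers) / len(numbers)

print(best, worst, average)  # 1 7 4.0
```

Searching for a value that is not in the list also costs seven comparisons, so a missing item is another instance of the worst case.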

The Misconceptions of Big O Notation

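It’s common to hear every time complexity described as Big O. In the convention used here, Big O denotes the worst case, while the best and average cases are denoted by Omega and Theta, respectively. Strictly speaking, the three symbols describe bounds on how a function grows: Big O is an upper bound, Omega a lower bound, and Theta a tight bound. Any of them can be applied to the best, average, or worst case, so pairing each symbol with one case is a widely used shorthand rather than a formal definition.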

Why Algorithm Efficiency Matters

In software development, understanding these notations is essential to assessing how well an algorithm scales, particularly in large applications. This can impact the performance of everything from simple web apps to complex data analysis programs.
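To make the idea of scaling concrete, here is a small sketch that counts worst-case comparisons for a for-loop search as the list grows. The function name `worst_case_comparisons` and the chosen sizes are assumptions made for illustration only.

```python
def worst_case_comparisons(n):
    """Count comparisons when the target is the last of n items."""
    items = list(range(n))
    target = n - 1  # last item, so the loop must visit every element
    comparisons = 0
    for value in items:
        comparisons += 1
        if value == target:
            break
    return comparisons


for n in (10, 1_000, 100_000):
    print(n, worst_case_comparisons(n))
```

The count grows in step with the list size: a list that is 100 times longer needs 100 times as many comparisons in the worst case.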

Conclusion

Time complexity is a fundamental concept that underpins effective algorithm design. By mastering the nuances of Omega, Theta, and Big O notations, developers can reason about how their code behaves in the best, average, and worst cases, and choose algorithms that keep applications responsive as inputs grow.